Unveiling the Challenges of Large-Scale AI Model Training - A Deep Dive into Meta's Llama 3 Experience

Meta recently published a study detailing the training of its Llama 3 405B model. The run used a cluster of 16,384 Nvidia H100 80GB GPUs and lasted 54 days, during which the cluster experienced 419 unexpected component failures. This article looks at what those failures reveal about large-scale AI model training and the lessons that can be drawn from Meta's experience.

The Llama 3 Model: A Brief Overview

Llama 3 is Meta's large language model, designed to process and generate human-like text. With 405 billion parameters, it is one of the largest and most complex models of its kind. A model of that size demands massive computational resources, which makes training it a significant undertaking.

The Training Process: A Glimpse into the Challenges

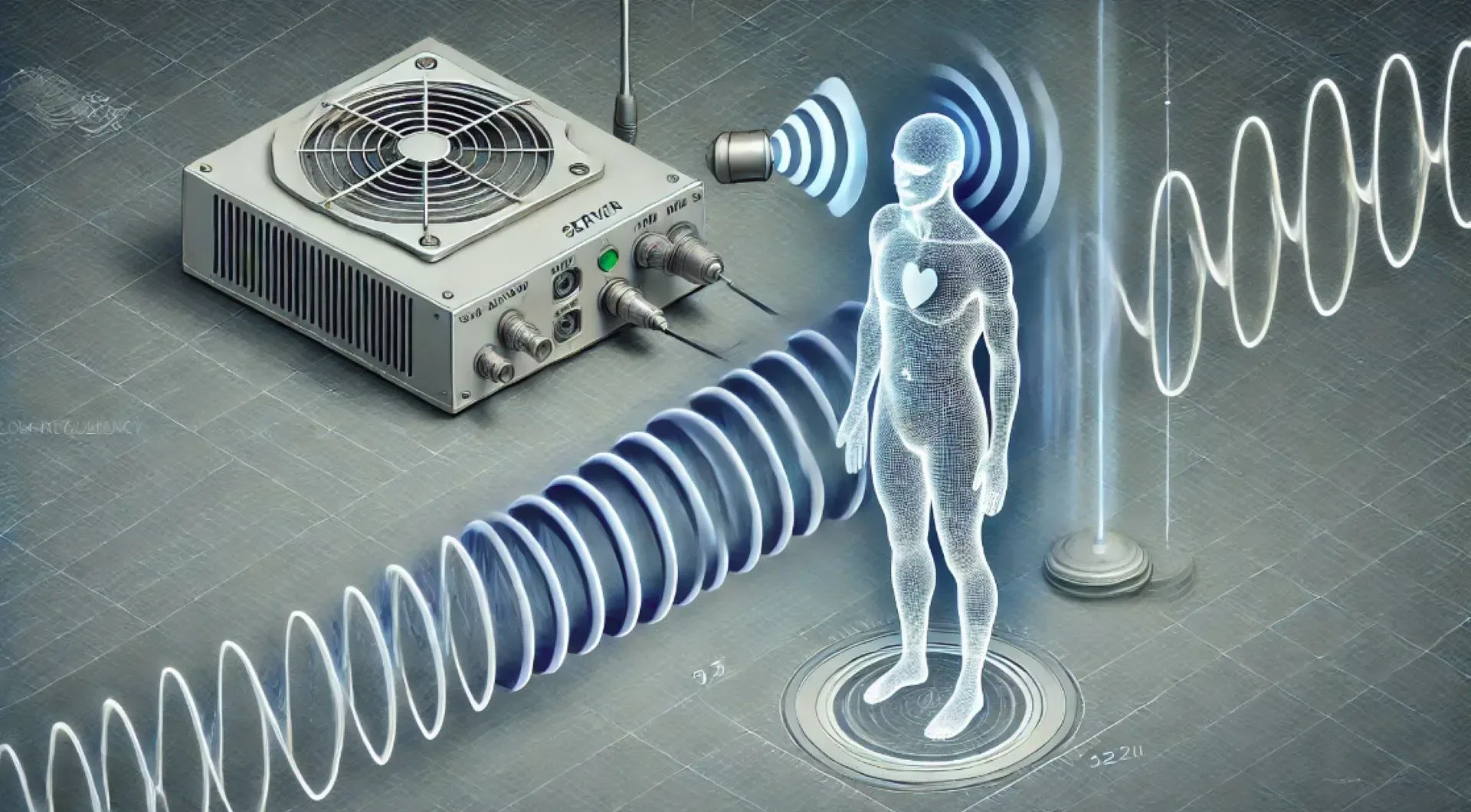

Training Llama 3 took 54 days on 16,384 Nvidia H100 80GB GPUs. The GPUs were orchestrated to work in tandem, with the workload divided across the cluster so the model could learn from a vast dataset. Over that period, the cluster encountered 419 unexpected component failures.

Component Failures: A Major Hurdle

The 419 unexpected component failures over 54 days work out to roughly one failure every three hours. In about half of the cases, GPUs or their onboard HBM3 memory were to blame, which shows how fragile even the most advanced hardware can be when it is pushed to its limits. At that rate, robust monitoring and maintenance systems are needed to limit the impact of each failure.
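The "one every three hours" figure follows directly from the numbers quoted above. The short Python sketch below reproduces that arithmetic. The function and variable names are illustrative, and the GPU-hours figure is a simple division over the whole cluster, not a statistic reported by Meta.

```python
# Figures quoted in the article.
TRAINING_DAYS = 54
UNEXPECTED_FAILURES = 419
GPU_COUNT = 16_384


def mean_hours_between_failures(days: float, failures: int) -> float:
    """Average wall-clock hours between failures across the whole cluster."""
    return days * 24 / failures


def failures_per_day(days: float, failures: int) -> float:
    """Average number of failures per calendar day."""
    return failures / days


cluster_hours = mean_hours_between_failures(TRAINING_DAYS, UNEXPECTED_FAILURES)
daily_failures = failures_per_day(TRAINING_DAYS, UNEXPECTED_FAILURES)

# Total GPU-hours of operation divided by the number of failures.
# Assumption: every GPU ran for the full 54 days, which ignores downtime.
gpu_hours_per_failure = TRAINING_DAYS * 24 * GPU_COUNT / UNEXPECTED_FAILURES

print(f"Hours between failures (cluster-wide): {cluster_hours:.2f}")
print(f"Failures per day: {daily_failures:.2f}")
print(f"GPU-hours of operation per failure: {gpu_hours_per_failure:,.0f}")
```

Cluster-wide, the interval comes out just over three hours, or nearly eight failures per day. Each individual GPU, however, accumulates tens of thousands of hours of operation per failure under this simplified assumption, so the high failure count is largely a consequence of scale.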

Lessons Learned and Future Directions

Meta's experience with Llama 3 shows that large-scale AI model training depends on robust hardware, efficient monitoring systems, and contingency planning. As AI models continue to grow in size and complexity, the need for solutions to these problems will only intensify, and progress in AI research and development will depend on how well the obstacles are overcome.

Conclusion: Navigating the Uncharted Territory of AI Model Training

In conclusion, Meta's Llama 3 training experience is a clear reminder of the challenges at the forefront of AI research. As we continue to push the boundaries of what is possible, we must also acknowledge the obstacles that stand in our way. By applying the lessons learned from this experience, we can forge a path forward marked by innovation, resilience, and an unwavering commitment to the advancement of AI.
