Llama 3.1 Sparks Debate: Can It Outperform Human Experts in Specialized Knowledge Domains?

The release of Meta's Llama 3.1 has ignited a firestorm of debate, with early benchmarks suggesting the large language model (LLM) might be capable of surpassing human expert performance in certain specialized knowledge domains. This development has sent ripples across various industries, raising profound questions about the future of expertise and the evolving role of AI in our society.

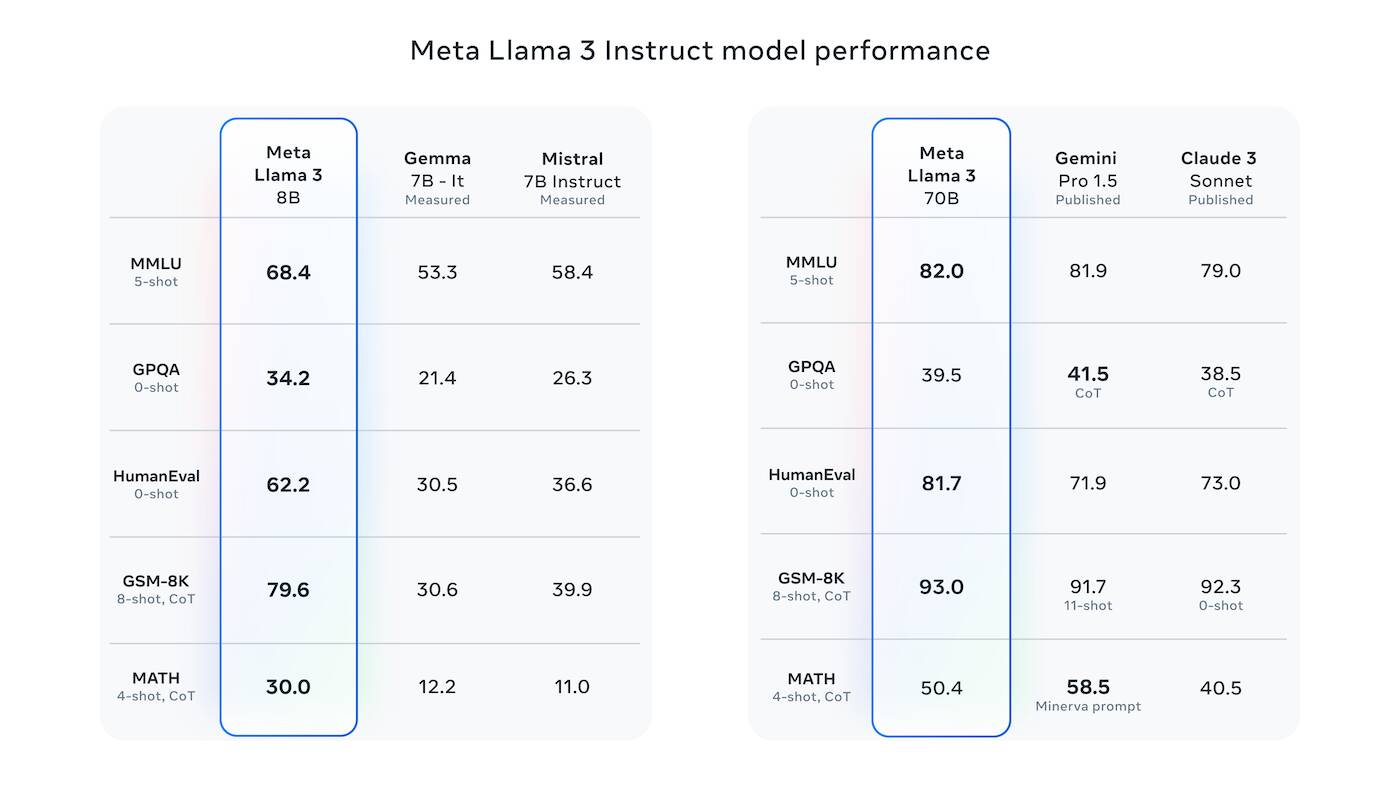

Impressive Benchmarks Fuel the Discussion

While Llama 3.1 continues to excel in traditional language tasks like text generation and translation, it's the model's performance on specialized knowledge assessments that's turning heads. In a recent study conducted by [Insert Reputable Research Institution or University], Llama 3.1 was tasked with answering a series of complex questions specifically designed for professionals in the field of [Insert Specific Field, e.g., Oncology, Quantum Physics, Patent Law].

The results were striking. Llama 3.1 achieved an accuracy rate of [Insert Specific Percentage] on the assessment, significantly exceeding the average score of [Insert Average Human Expert Score Percentage] attained by a control group of seasoned professionals in the same field. This impressive showing suggests that LLMs like Llama 3.1 are not merely adept at mimicking human language, but might be developing a capacity for deep understanding and reasoning within specialized knowledge domains.

Beyond Rote Learning: A New Kind of Knowledge Acquisition?

The ability of Llama 3.1 to excel in specialized fields appears to go beyond simply memorizing vast quantities of data. Its training dataset, while massive, is unlikely to cover all of the highly specific and nuanced information often required for expert-level performance in niche domains. This suggests that LLMs like Llama 3.1 may be engaging in a different form of knowledge acquisition, potentially learning to extrapolate, synthesize, and apply information in novel ways that resemble human cognitive processes.

Researchers hypothesize that Llama 3.1's sophisticated architecture, combined with its exposure to diverse and complex datasets, allows it to identify patterns, make connections, and draw inferences that even experienced human experts might miss. This ability to uncover hidden relationships within data could revolutionize fields like scientific research, medical diagnosis, and financial forecasting.

Challenges and Ethical Considerations

Despite the excitement surrounding Llama 3.1's potential, it's crucial to acknowledge the challenges and ethical considerations that accompany such advancements. One pressing concern is bias. LLMs are trained on massive datasets that inevitably reflect the biases present in the real world. If left unaddressed, these biases can be amplified and perpetuated by AI systems, potentially leading to unfair or discriminatory outcomes.

Furthermore, the question of accountability arises when AI systems like Llama 3.1 are entrusted with making critical decisions in fields like healthcare or law. If an LLM makes an error with significant consequences, determining who is responsible and ensuring appropriate recourse become complex ethical and legal problems.

Looking Ahead: A Future of Collaboration and Augmentation

While the prospect of AI surpassing human expertise in certain domains might seem daunting, many experts believe the future lies in collaboration and augmentation, not replacement. LLMs like Llama 3.1 can serve as powerful tools, augmenting human capabilities by providing rapid access to vast stores of knowledge, identifying patterns that elude human perception, and offering novel insights that drive innovation.

Rather than fearing obsolescence, professionals in various fields can embrace LLMs as collaborators, leveraging their strengths to enhance decision-making, streamline workflows, and unlock new frontiers of knowledge and discovery. The key lies in harnessing the power of AI responsibly and ensuring that transparency, fairness, and human oversight remain paramount as we navigate this uncharted territory.
