Decoding Google Crawler - Navigating the Search Engine's Heartbeat

The Pulse of Google Search: Understanding Crawlers

Google crawler, also known as Googlebot, is the backbone of the search engine's functionality. It plays a pivotal role in maintaining the search engine's colossal database of websites, ensuring that users receive the most relevant and up-to-date information.

What is Google Crawler?

Google crawler is an automated program designed to continuously scan and index websites, enabling Google to provide accurate search results. This process involves discovering new content, updating existing content, and removing outdated information.

How Google Crawler Works

The crawling process involves several key steps:

- Discovery: Googlebot discovers new websites and pages through links from existing sites, sitemaps, and submissions.

- Crawling: The crawler scans the discovered content, analyzing its relevance, quality, and structure.

- Indexing: The crawled content is then indexed, making it searchable through Google's database.
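The three steps above can be sketched as a toy breadth-first crawler. This is only an illustration of the discover, crawl, index loop, not Google's implementation: the `PAGES` dictionary, the `example.com` URLs, and the `crawl` function are all hypothetical, and a real crawler would fetch pages over HTTP and respect robots.txt.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin

# Hypothetical in-memory "web": URL -> HTML. Stands in for real HTTP fetches.
PAGES = {
    "https://example.com/": '<a href="/about">About</a> <a href="/blog/">Blog</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/blog/": '<a href="post-1">Post 1</a>',
    "https://example.com/blog/post-1": "<p>No links here.</p>",
}


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in a document."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(seed):
    """Breadth-first discovery from a seed URL; returns a toy index of URL -> HTML."""
    index = {}
    queue = deque([seed])
    seen = {seed}
    while queue:
        url = queue.popleft()
        html = PAGES.get(url)
        if html is None:
            continue  # page not found in the toy web, nothing to index
        index[url] = html  # "indexing": store the crawled content
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            absolute = urljoin(url, href)  # resolve relative links
            if absolute not in seen:  # "discovery": queue unseen URLs
                seen.add(absolute)
                queue.append(absolute)
    return index
```

Calling `crawl("https://example.com/")` visits the home page first, then the pages it links to, and so on, which is why well-linked pages are easier for a crawler to discover.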

The Significance of Google Crawler

The continuous scanning and indexing of websites enable Google to provide relevant and accurate information to users. This process has several benefits:

- Improved search results: Users receive the most up-to-date and relevant information.

- Increased website visibility: Websites are more likely to appear in search results, driving traffic and engagement.

- Competitive advantage: Websites with frequently updated content are more likely to outrank competitors.

Optimizing for Google Crawler

To ensure optimal crawling and indexing, website owners can take several steps:

- Regularly update content to signal to Googlebot that the site is active.

- Implement a clear site structure and navigation.

- Use descriptive metadata and optimized images.

- Monitor crawl errors and resolve issues promptly.

By understanding the inner workings of Google crawler and optimizing accordingly, website owners can improve their online presence and provide users with a seamless search experience.

How Google Crawler Works: A Technical Insight

Google's crawler, also known as Googlebot, is the backbone of the search engine's functionality. It continuously scans and indexes websites to provide users with the most accurate and up-to-date information. But have you ever wondered how Googlebot works its magic? Let's dive into the technical aspects of Google's crawling mechanism.

Dynamic Crawling Frequency and Depth

Googlebot employs sophisticated algorithms to determine the frequency and depth of crawling. This decision-making process is influenced by various factors, including:

- Website updates: How frequently a website publishes new content or updates existing pages.

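- User engagement: The level of interaction users have with a website, such as time spent on site, bounce rate, and click-through rate. Google does not publicly confirm these as crawl signals; its documentation describes crawl demand in terms of a URL's popularity and how stale its indexed copy is.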

- Link equity: The quality and quantity of inbound links pointing to a website, indicating its authority and relevance.

Crawler Types: Desktop and Mobile

Googlebot uses two primary types of crawlers to simulate user experiences:

- Desktop crawlers: Mimic traditional desktop browser interactions to index desktop-specific content.

- Mobile crawlers: Emulate mobile browser interactions to index mobile-specific content and ensure a seamless user experience.
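The two crawler types identify themselves through the User-Agent header, so server-side code can tell them apart. The sketch below assumes the User-Agent formats Google documents for Googlebot Desktop and Googlebot Smartphone; the function name `classify_googlebot` is hypothetical. User-Agent strings are easy to spoof, so this is a labeling aid only, and Google recommends a reverse DNS lookup to verify that a request really comes from Googlebot.

```python
# Sample strings in the format Google documents; "W.X.Y.Z" is Google's own
# placeholder for the Chrome version, which changes over time.
SMARTPHONE_UA = (
    "Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X Build/MMB29P) "
    "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/W.X.Y.Z Mobile Safari/537.36 "
    "(compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
)
DESKTOP_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def classify_googlebot(user_agent):
    """Return 'smartphone', 'desktop', or None for a User-Agent header value."""
    ua = user_agent.lower()
    if "googlebot/" not in ua:
        return None  # not the main Googlebot crawler
    # The smartphone crawler's string carries Android and Mobile tokens.
    if "android" in ua and "mobile" in ua:
        return "smartphone"
    return "desktop"
```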

By understanding how Googlebot operates, web developers and SEO specialists can optimize their websites to improve crawl efficiency, increase visibility, and ultimately drive more traffic.

Google continuously refines its crawling algorithms to better serve users. Staying informed about these advancements enables web professionals to adapt and refine their strategies, ensuring their online presence remains robust and competitive.

Optimizing Your Website for Google Crawler

To ensure your website ranks well in search engine results pages (SERPs), understanding how to optimize for Google's crawler is crucial. This section delves into the essential strategies for optimizing your website to effectively interact with Google's crawler.

Streamlining Navigation and Site Structure

Efficient Crawling through Clear Site Architecture

Google's crawler, also known as Googlebot, navigates your website through links and site structures. Ensuring clear navigation and site structure is vital for efficient crawling. Implement the following strategies:

- Organize content logically using categories and subcategories.

- Utilize breadcrumb navigation for clearer hierarchy.

- Employ descriptive and concise URL structures.

- Regularly update and maintain internal linking.

By streamlining your website's navigation and structure, you facilitate Googlebot's ability to crawl and index your content efficiently.

Guiding Crawlers with Robots.txt Files
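A robots.txt file is a plain-text list of rules that tells crawlers which paths they may request, and a rule set can be checked locally before it goes live. The following is a minimal sketch using Python's standard `urllib.robotparser`; the rules, the `example.com` domain, and the `allowed` helper are hypothetical. Python's parser is not Google's parser, so results may differ on edge cases such as wildcard patterns.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rule set: keep all crawlers out of the admin area and
# internal search result pages, and allow everything else.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /search/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(path, agent="Googlebot"):
    """Return True if the given agent may fetch the path under ROBOTS_TXT."""
    return parser.can_fetch(agent, "https://example.com" + path)
```

With these rules, `allowed("/blog/post")` is true and `allowed("/admin/login")` is false. Running such checks against a list of important URLs is one way to catch a rule that blocks more than intended.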

Avoiding Duplicate Content and Sensitive Pages

Robots.txt files play a critical role in guiding Google's crawler. These files tell crawlers which URLs they may crawl and which to skip. Use robots.txt files to:

- Keep crawlers away from duplicate content pages.

- Keep crawlers out of areas such as admin panels. Robots.txt is publicly readable and is not an access control, so protect sensitive information with authentication as well.

- Prevent crawling of unnecessary resources (e.g., internal search result pages). Avoid blocking CSS and JavaScript files, because Googlebot needs them to render pages.

Keep the robots.txt file accurate and up to date.

By leveraging robots.txt files effectively, you ensure Googlebot focuses on crawling relevant content.

Leveraging Sitemap Submissions and Content Updates

Keeping Googlebot Informed and Engaged

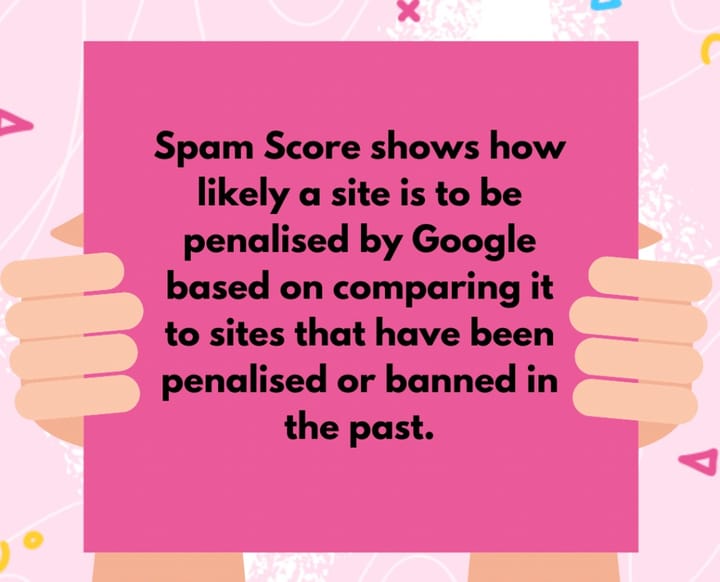

Sitemap submissions and regular content updates are vital for maintaining a healthy relationship with Google's crawler. Submit sitemaps to Google Search Console to:

- Inform Googlebot of new content.

- Update existing content.

- Ensure crawlability of dynamic content.

Regularly update content to:

- Demonstrate site activity and relevance.

- Attract return visits from Googlebot.

- Improve indexing and ranking opportunities.

By leveraging sitemap submissions and regular content updates, you keep Googlebot informed and engaged, ensuring optimal crawling and indexing.

Common Google Crawler Issues and Solutions

Google's crawler, also known as Googlebot, plays a vital role in indexing and ranking websites. However, issues can arise that hinder its ability to crawl and index your site efficiently.

Understanding Crawl Errors

Crawl errors occur when Googlebot encounters problems accessing or crawling your website's pages. These errors can stem from various sources, including:

- Server downtime or poor website maintenance

- Incorrect URL formatting or broken links

- Robots.txt file misconfiguration

- DNS resolution issues

Identifying Crawl Errors

To identify crawl errors, monitor Google Search Console regularly for crawl stats and error reports. This tool provides valuable insights into Googlebot's interactions with your website.

Resolving Crawl Errors

Resolving crawl errors promptly is crucial to maintaining optimal search visibility. Implement the following solutions:

- Server and Maintenance Issues: Ensure your server is consistently available, and schedule maintenance during off-peak hours.

- URL and Link Issues: Verify URL structures and fix broken links to facilitate smooth crawling.

- Robots.txt Optimization: Configure your robots.txt file correctly to guide Googlebot through your site.

- DNS Resolution: Resolve DNS issues promptly to prevent crawl errors.
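Alongside Search Console, a site's own access logs show which Googlebot requests failed. The sketch below counts error responses served to Googlebot. It assumes logs in the widely used "combined" format; the sample lines, IP addresses, timestamps, and the `googlebot_errors` function are hypothetical, and a real log format may need a different pattern.

```python
import re
from collections import Counter

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

# Hypothetical access-log lines in the "combined" format.
LOG_LINES = [
    '66.249.66.1 - - [10/Jan/2025:10:00:00 +0000] "GET / HTTP/1.1" 200 5120 "-" "' + GOOGLEBOT_UA + '"',
    '66.249.66.1 - - [10/Jan/2025:10:00:05 +0000] "GET /old-page HTTP/1.1" 404 312 "-" "' + GOOGLEBOT_UA + '"',
    '66.249.66.1 - - [10/Jan/2025:10:00:09 +0000] "GET /blog/ HTTP/1.1" 503 0 "-" "' + GOOGLEBOT_UA + '"',
    '203.0.113.7 - - [10/Jan/2025:10:01:00 +0000] "GET /old-page HTTP/1.1" 404 312 "-" "Mozilla/5.0 (X11; Linux x86_64; rv:128.0) Gecko/20100101 Firefox/128.0"',
    '66.249.66.1 - - [10/Jan/2025:10:02:00 +0000] "GET /old-page HTTP/1.1" 404 312 "-" "' + GOOGLEBOT_UA + '"',
]

# Captures the request path, the status code, and the final quoted field (user agent).
LINE_RE = re.compile(
    r'"(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" (?P<status>\d{3}) .*"(?P<agent>[^"]*)"$'
)


def googlebot_errors(lines):
    """Count 4xx/5xx responses served to Googlebot, keyed by (status, path)."""
    errors = Counter()
    for line in lines:
        match = LINE_RE.search(line)
        if not match or "Googlebot" not in match["agent"]:
            continue  # unparseable line or a different client
        status = int(match["status"])
        if status >= 400:
            errors[(status, match["path"])] += 1
    return errors
```

On the sample data, the result groups the two 404 responses for `/old-page` and the single 503 for `/blog/`, while the human visitor's 404 is ignored. Repeated 404s usually point to broken links, and 5xx responses to server availability problems.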
Best Practices for Smooth Crawling

In addition to resolving crawl errors, follow these best practices to ensure smooth crawling:

- Regularly update your website's content and structure

- Use clear, descriptive URLs and meta tags

- Optimize images and videos for faster loading

- Leverage schema markup for enhanced search visibility

- Monitor website loading speed and optimize as needed

By understanding and addressing common Google crawler issues, you can ensure your website remains optimized for search engines, maintaining and improving your online presence.

The Future of Google Crawler: Emerging Trends

As Google continues to refine its algorithms and improve search results, the Google Crawler remains at the forefront of innovation. In this section, we'll delve into the emerging trends that will shape the future of Google Crawler.

1. Google's Increasing Focus on Mobile-First Indexing

With the majority of web traffic originating from mobile devices, Google has shifted its focus towards mobile-first indexing. This means that Google Crawler will prioritize crawling and indexing mobile versions of websites over desktop versions. To adapt to this trend, website owners must ensure their mobile sites are optimized for speed, usability, and content.

This shift has significant implications for website design and development. Websites must now provide a seamless user experience across various mobile devices, with considerations for:

- Responsive design

- Fast page loading times

- Easy navigation and content accessibility

- Mobile-friendly content formats

2. Artificial Intelligence Integration for Smarter Crawling

Google is increasingly leveraging artificial intelligence (AI) to enhance the efficiency and effectiveness of its crawling capabilities. AI-powered crawling enables Google to:

- Identify and prioritize high-quality content

- Detect and avoid spam or low-value content

- Improve crawl frequency and depth

- Enhance overall search engine results page (SERP) relevance

This integration of AI will enable Google Crawler to better understand website content, structure, and user behavior, leading to more accurate search results.

3. Enhanced Emphasis on Website User Experience and Core Web Vitals

Google's growing focus on user experience and Core Web Vitals signifies a critical shift in how websites are evaluated. Google evaluates pages, largely from real-user field data rather than from the crawl itself, on metrics such as:

- Page loading speed (Largest Contentful Paint)

- Interactivity (First Input Delay, which Google replaced with Interaction to Next Paint in March 2024)

- Visual stability (Cumulative Layout Shift)

- Mobile responsiveness and accessibility

Websites that prioritize user experience and optimize for these core web vitals will likely see improved search engine rankings and increased crawl frequency.
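Each of these metrics has published thresholds that sort a measurement into "good", "needs improvement", or "poor". The sketch below encodes the thresholds Google publishes for the Core Web Vitals, including Interaction to Next Paint (INP), which Google adopted as the successor to First Input Delay. The `rate` function and the `THRESHOLDS` table are hypothetical names for illustration.

```python
# (upper bound for "good", upper bound for "needs improvement"), per Google's
# published Core Web Vitals thresholds.
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # Largest Contentful Paint, seconds
    "FID": (100, 300),   # First Input Delay, milliseconds
    "INP": (200, 500),   # Interaction to Next Paint, milliseconds
    "CLS": (0.1, 0.25),  # Cumulative Layout Shift, unitless score
}


def rate(metric, value):
    """Classify a measurement as 'good', 'needs improvement', or 'poor'."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"
```

For example, a Largest Contentful Paint of 2.1 seconds rates as good, while a Cumulative Layout Shift of 0.3 rates as poor.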

In conclusion, the future of Google Crawler is shaped by these emerging trends. By understanding and adapting to these developments, website owners and developers can optimize their online presence, improve search engine rankings, and provide a better user experience.

Conclusion: Harnessing Google Crawler's Power

Unlocking the Secrets to SEO Success

Mastering the Pulse of Google's Search Engine

In the vast digital landscape, understanding Google crawler's inner workings is crucial for SEO success. By deciphering the search engine's heartbeat, businesses and individuals can optimize their online presence, ensuring their content reaches the desired audience. In this conclusion, we'll summarize the key takeaways and provide actionable insights to harness Google crawler's power.

Key Takeaway 1: Understanding Google Crawler's Inner Workings

Google's algorithmic prowess relies heavily on its crawler's ability to scan, index, and retrieve web pages efficiently. By grasping how Googlebot operates, you can tailor your website to facilitate seamless crawling, indexing, and ranking. This synergy ensures your online content remains visible, relevant, and competitive.

Key Takeaway 2: Optimizing Your Website for Googlebot

To work in harmony with Googlebot, focus on:

- Clean Code: Ensure your website's structure and coding are organized and easily interpretable.

- Content Quality: Produce high-quality, engaging, and informative content.

- Mobile-Friendliness: Ensure a seamless user experience across devices.

- Page Speed: Optimize loading times to reduce bounce rates.

- XML Sitemaps: Facilitate easy navigation for Googlebot.
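As an illustration of the XML sitemap item, a small sitemap can be generated with Python's standard library. This is a minimal sketch that follows the sitemaps.org protocol; the `build_sitemap` function, the URLs, and the dates are hypothetical, and a production sitemap would normally be written to a file with an XML declaration and submitted through Google Search Console.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build sitemap XML from (url, lastmod) pairs; lastmod is a YYYY-MM-DD string."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical pages and modification dates.
sitemap_xml = build_sitemap([
    ("https://example.com/", "2025-01-10"),
    ("https://example.com/blog/post-1", "2025-01-08"),
])
```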

Key Takeaway 3: Staying Updated on Crawling Trends and Best Practices

The digital landscape is constantly evolving. Stay ahead of the curve by:

- Monitoring Google Updates: Keep pace with algorithmic changes and adjustments.

- Analyzing Crawling Patterns: Use tools like Google Search Console to track crawling activity.

- Industry Insights: Engage with SEO communities and experts to share knowledge.

- Adapting Strategies: Adjust your optimization tactics in response to emerging trends.

Capitalizing on Google Crawler's Power

By embracing these insights and adapting to Google crawler's dynamics, you'll unlock the full potential of your online presence. Remember:

- Quality Content: Remains the cornerstone of SEO success.

- Technical Optimization: Amplifies content visibility.

- Ongoing Improvement: Ensures long-term relevance.

In conclusion, decoding Google crawler's heartbeat offers a competitive edge in the digital arena. By harmonizing your website with Googlebot and staying informed about the latest trends, you'll navigate the search engine landscape with confidence, driving your online success to new heights.
