Beyond Google's Radar - Unraveling the Mystery of Unindexed Pages

The Discovery Paradox: When Google Finds But Doesn't Index

Have you ever wondered why Google discovers pages but doesn't index them? This article calls that situation the "Discovery Paradox." Google Search Console reports it with statuses such as "Discovered - currently not indexed" and "Crawled - currently not indexed." It's a common issue for website owners and SEO specialists, and understanding its causes can help you improve your site's visibility in search results.

What is the Discovery Paradox?

The Discovery Paradox occurs when Google's crawlers find a webpage but don't add it to their massive index. This means that even though Google is aware of the page's existence, it won't appear in search results, leaving the page invisible to potential visitors.

Why does the Discovery Paradox happen?

There are several reasons why Google might discover a page but choose not to index it. Some of the most common reasons include:

- Low-quality content: If a page contains thin, duplicate, or low-quality content, Google might not deem it worthy of indexing.

- Lack of crawlable content: If a page is mostly images, videos, or JavaScript, Google's crawlers might struggle to extract meaningful content, leading to a lack of indexing.

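- Technical issues: Server errors, blocked resources, or very slow responses can stop Google from fetching or rendering a page, and a page that cannot be fetched cannot be indexed. Poor mobile responsiveness can also limit how much of the page's content Google sees.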

- Link equity: If a page lacks high-quality backlinks or has a poor internal linking structure, Google might not consider it important enough to index.
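To make the "lack of crawlable content" point concrete, here is a minimal sketch that counts how many words a page exposes in its raw HTML, ignoring script and style blocks. The class and function names are hypothetical, and the check is only a rough proxy: it does not execute JavaScript, so it approximates what a crawler sees before rendering, not what Google ultimately indexes.

```python
from html.parser import HTMLParser


class VisibleTextExtractor(HTMLParser):
    """Collects text found outside <script>, <style>, and <noscript> elements."""

    SKIPPED_TAGS = {"script", "style", "noscript"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0  # > 0 while inside a skipped element
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())


def visible_word_count(html: str) -> int:
    """Return the number of words present in the raw HTML (title included)."""
    parser = VisibleTextExtractor()
    parser.feed(html)
    parser.close()
    return len(" ".join(parser.chunks).split())


# Hypothetical example: a JavaScript "shell" page exposes no text at all.
js_shell = '<html><body><div id="root"></div><script>render()</script></body></html>'
print(visible_word_count(js_shell))
```

A page that scores near zero here relies entirely on rendering to show its content, which is a reasonable prompt to check how it appears in Search Console's URL inspection view.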

How can you overcome the Discovery Paradox?

Fortunately, there are steps you can take to improve your website's visibility and increase the chances of Google indexing your pages:

- Create high-quality, engaging, and informative content that adds value to your audience.

- Ensure your website is technically sound, with fast loading speeds, proper mobile responsiveness, and clear internal linking.

- Build high-quality backlinks from authoritative sources to increase your website's link equity.

- Regularly monitor your website's crawl errors and address any technical issues promptly.

Quality Control: Google's Indexing Standards

Ensuring Relevant and Useful Content

Google does not index every page it crawls. Before adding a page to its index, Google assesses the page's quality and usefulness, and pages that fall short may be left out. This evaluation helps maintain the integrity and credibility of search results.

Factors Affecting Indexing

Several factors can influence Google's decision to index a page. These include:

- Content quality: Pages with low-quality content, thin content, or duplicate information may not be indexed.

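- Relevance: Relevance to a specific query mainly affects ranking rather than indexing, but pages that serve no clear search need are more likely to be left out of the index.

- User experience: Pages with poor user experience, such as slow loading speeds or mobile usability issues, are more likely to rank poorly, and severe problems can keep Google from fetching and indexing them at all.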

Google's Indexing Guidelines

Google publishes guidelines for site owners, currently called Google Search Essentials (formerly the Webmaster Guidelines), that describe what pages need in order to be eligible for indexing. These guidelines emphasize the importance of:

- Creating high-quality, engaging, and informative content.

- Ensuring relevance and usefulness to searchers.

- Providing a positive user experience.

Conclusion

Google's indexing standards are designed to ensure that searchers receive relevant and useful results. By understanding these standards, webmasters can optimize their pages to meet the required quality and relevance thresholds, increasing their chances of being indexed and visible to searchers.

Technical Issues: The Hidden Barriers to Indexing

When it comes to indexing, technical issues can be a major roadblock. These hidden barriers can prevent search engines from crawling and indexing your pages, rendering them invisible to searchers. In this section, we'll delve into the technical problems that can hinder indexing and explore the importance of ensuring website technical health.

Crawl Errors: The First Hurdle

Crawl errors occur when search engines encounter issues while trying to access or crawl your website's pages. These errors can be caused by various factors, including:

- Broken links or dead pages

- Server errors or downtime

- Incorrect URL formatting

- Blocked URLs or pages

Resolving crawl errors is crucial, as they can significantly impact indexing and search engine ranking.
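The causes listed above usually surface as HTTP status codes, so a first triage step can be sketched as a small classifier. The category labels and function names below are hypothetical, and the grouping is a simplification for illustration; it is not how Google itself buckets crawl errors.

```python
from collections import Counter


def classify_crawl_status(status_code: int) -> str:
    """Map an HTTP status code to a rough crawl-health category."""
    if 200 <= status_code < 300:
        return "ok"
    if 300 <= status_code < 400:
        return "redirect"
    if status_code in (404, 410):
        return "not_found"       # broken links or dead pages
    if status_code in (401, 403):
        return "access_denied"   # blocked URLs or pages
    if status_code == 429:
        return "rate_limited"
    if 400 <= status_code < 500:
        return "client_error"
    if 500 <= status_code < 600:
        return "server_error"    # server errors or downtime
    return "unknown"


def summarize(results: dict[str, int]) -> Counter:
    """Count how many URLs fall into each category."""
    return Counter(classify_crawl_status(code) for code in results.values())


# Hypothetical crawl results: URL path -> status code returned by the server.
results = {"/": 200, "/old": 301, "/missing": 404, "/gone": 410, "/api": 503}
print(summarize(results))
```

In practice the status codes would come from your server logs or a crawl of your own site; the sample dictionary here only stands in for that data.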

Poor Mobile Responsiveness: A Growing Concern

With the majority of searches now conducted on mobile devices, Google primarily uses the mobile version of a page for indexing and ranking (mobile-first indexing), so poor mobile responsiveness can be a significant barrier. If your mobile pages omit content that the desktop version shows, or load slowly on mobile devices, search engines may index less of your content or struggle to crawl your pages.

Slow Page Speed: The Silent Killer

Page speed affects both crawling and search engine ranking. When a server responds slowly, Google tends to crawl the site less often, and slow-loading pages can lead to:

- Higher bounce rates

- Lower engagement

- Reduced crawl frequency

Ensuring fast page speeds is essential for improving user experience and search engine performance.

Ensuring Website Technical Health

Regular website maintenance is vital for identifying and resolving technical issues. This includes:

- Monitoring crawl errors and resolving them promptly

- Ensuring mobile-friendliness and fast page speeds

- Regularly updating software and plugins

- Conducting technical SEO audits

By prioritizing website technical health, you can overcome hidden barriers to indexing and improve your online visibility.
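One audit step that is easy to automate is checking whether important URLs are blocked by robots.txt, one source of the "blocked URLs or pages" crawl errors mentioned earlier. The sketch below uses Python's standard `urllib.robotparser`; the function name, sample rules, and URLs are hypothetical. The standard-library parser is simpler than Google's own matching (for example, it does not implement wildcard patterns), so treat the result as an approximation.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given robots.txt disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse rules from text, no network access
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical robots.txt and URL list for illustration.
robots_txt = "User-agent: *\nDisallow: /private/\n"
urls = [
    "https://example.com/blog/post",
    "https://example.com/private/report",
]
print(blocked_urls(robots_txt, urls))
```

If a page you want indexed shows up in this list, Google cannot crawl it and therefore cannot read its content, so the rule is worth revisiting.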

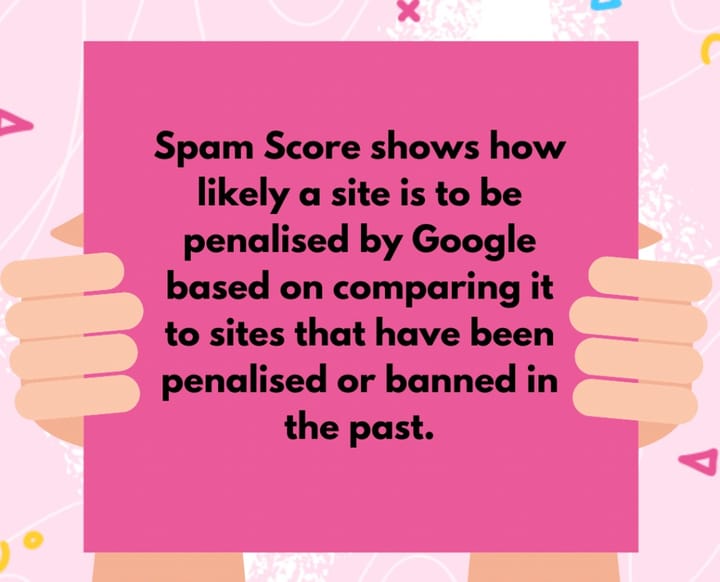

Content Saturation: When Google Says No to Duplicate Content

As we delve deeper into the realm of unindexed pages, it's essential to understand the significance of content saturation and its impact on search engine rankings. Google's algorithms are designed to filter out duplicate or similar content to maintain the quality of its index. This means that websites with unique and original content have a higher chance of being indexed and ranking higher in search engine results pages (SERPs).

Why Google Frowns Upon Duplicate Content

Duplicate content can lead to a poor user experience, as it provides little to no value to searchers. Google's primary goal is to offer relevant and diverse results, and duplicate content hinders this objective. By filtering duplicates and showing one preferred (canonical) version, Google aims to give users the most accurate and useful information possible.

The Consequences of Content Saturation

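Google generally filters duplicate pages rather than penalizing them, unless the duplication appears intended to manipulate rankings. Even so, websites with high content saturation are likely to see: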

- Lower search engine rankings

- Reduced organic traffic

- Decreased credibility and trust

Breaking the Cycle of Content Saturation

To avoid the pitfalls of content saturation, focus on creating high-quality, engaging, and original content that resonates with your target audience. Conduct thorough research, and use tools like plagiarism checkers to ensure your content stands out from the crowd.
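If you want a quick, local check before reaching for a plagiarism tool, near-duplicate text can be estimated with word shingles and Jaccard similarity. This is a common general-purpose technique, not Google's actual duplicate detection; the function names and the shingle size of 3 are assumptions made for this sketch.

```python
import re


def shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """Return the set of overlapping word sequences of length `size`."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard_similarity(a: str, b: str, size: int = 3) -> float:
    """Share of shingles the two texts have in common (0.0 to 1.0)."""
    set_a, set_b = shingles(a, size), shingles(b, size)
    if not set_a or not set_b:
        return 0.0  # nothing to compare
    return len(set_a & set_b) / len(set_a | set_b)


# Two hypothetical sentences that differ only in the last word.
print(jaccard_similarity("the quick brown fox jumps", "the quick brown fox sleeps"))
```

Scores close to 1.0 suggest two pages say nearly the same thing, which makes them candidates for merging, rewriting, or pointing at one preferred version.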

The Role of Internal Linking in Indexing

Internal linking plays a vital role in indexing, as it helps search engines like Google understand the structure and content hierarchy of a website. Proper internal linking enables search engines to discover new pages, understand the relationships between content, and improve the overall user experience.

How Internal Linking Affects Indexing

Internal linking has a direct impact on indexing, as it:

- Helps search engines discover new pages: By linking to new content from existing pages, search engines can quickly find and index new pages.

- Clarifies content hierarchy: Internal linking shows search engines the relationships between pages, helping them understand the content structure and prioritize indexing.

- Enhances user experience: Well-structured internal linking makes it easier for users to navigate the website, reducing bounce rates and improving engagement.
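The discovery and hierarchy points above can be illustrated by treating a site as a graph of internal links. The sketch below runs a breadth-first search from the home page to compute each page's click depth and to list pages that no internal link path reaches (often called orphan pages). The link map and function names are hypothetical; in practice you would build the map from a crawl of your own site.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], start: str) -> dict[str, int]:
    """Breadth-first search: minimum number of clicks from `start` to each page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphan_pages(links: dict[str, list[str]], start: str) -> list[str]:
    """Pages known to the site map that cannot be reached from `start`."""
    reachable = set(click_depths(links, start))
    all_pages = set(links) | {t for targets in links.values() for t in targets}
    return sorted(all_pages - reachable)


# Hypothetical site: each key links to the pages in its list.
site = {
    "/": ["/blog", "/about"],
    "/blog": ["/blog/post-1"],
    "/about": [],
    "/blog/post-1": ["/"],
    "/landing-old": ["/"],  # links out, but nothing links to it
}
print(click_depths(site, "/"))
print(orphan_pages(site, "/"))
```

Pages with a large click depth, or pages that appear in the orphan list, are the ones a crawler following internal links is least likely to find.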

Best Practices for Internal Linking

To maximize the impact of internal linking on indexing, follow these best practices:

- Use descriptive anchor text: Use relevant and descriptive text for links, avoiding generic phrases like "Click here."

- Link to relevant content: Only link to content that adds value to the user experience and supports the content hierarchy.

- Use a clear linking strategy: Establish a consistent linking strategy to avoid confusing search engines or users.

- Monitor and update links: Regularly check for broken links and update them to maintain a smooth user experience and prevent indexing issues.
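The anchor-text advice above can be checked automatically. Here is a minimal sketch that collects the links in an HTML snippet and flags those whose text is a generic phrase. The phrase list and names are assumptions chosen for illustration; extend the list to suit your own content.

```python
from html.parser import HTMLParser

# Hypothetical list of phrases considered too generic to describe a link.
GENERIC_ANCHORS = {"click here", "here", "read more", "more", "link"}


class AnchorCollector(HTMLParser):
    """Collects (href, anchor text) pairs for every <a href=...> element."""

    def __init__(self):
        super().__init__()
        self._href = None   # href of the link currently being read, if any
        self._text = []
        self.anchors = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            text = " ".join("".join(self._text).split())  # normalize whitespace
            self.anchors.append((self._href, text))
            self._href = None


def find_generic_anchors(html: str) -> list[tuple[str, str]]:
    """Return the links whose anchor text is a generic phrase."""
    collector = AnchorCollector()
    collector.feed(html)
    collector.close()
    return [(href, text) for href, text in collector.anchors
            if text.lower() in GENERIC_ANCHORS]


html = '<p><a href="/pricing">See pricing plans</a> or <a href="/docs">Click here</a>.</p>'
print(find_generic_anchors(html))
```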

Conclusion

Internal linking is a crucial aspect of indexing, as it helps search engines understand website structure and content hierarchy. By implementing a clear internal linking strategy, website owners can improve indexing, user experience, and overall website visibility.

Beyond Indexing: Best Practices for Website Visibility

Ensuring your website is indexed is just the first step towards achieving optimal online visibility. To truly unravel the mystery of unindexed pages, it's crucial to delve deeper into the best practices that go beyond mere indexing.

Regularly Update Content to Keep Search Engines Crawling and Indexing

Search engines thrive on fresh and relevant content. Regularly updating your website's content not only keeps your audience engaged but also encourages search engines to crawl and index your pages more frequently. This can be achieved by:

- Adding new blog posts or articles

- Updating existing content to reflect changing trends or information

- Expanding product or service offerings
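One common way to tell search engines that content has changed is an XML sitemap with a `lastmod` date for each URL. The article above does not prescribe this, so treat the following as an optional sketch; the function name, URLs, and dates are hypothetical, while the namespace is the one defined by the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: dict[str, date]) -> str:
    """Build a sitemap XML string from a mapping of URL -> last modified date."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, modified in sorted(pages.items()):
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = modified.isoformat()  # YYYY-MM-DD
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical pages and modification dates.
pages = {
    "https://example.com/blog/new-post": date(2024, 5, 1),
    "https://example.com/products": date(2024, 4, 15),
}
print(build_sitemap(pages))
```

Keep the `lastmod` values honest: they are only useful if they change when the page content actually changes.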

Monitor Website Technical Health and Address Issues Promptly

A technically sound website is essential for search engine crawlers to navigate and index pages efficiently. Keep a watchful eye on your website's technical health by:

- Regularly checking for broken links and fixing them

- Ensuring mobile-friendliness and page speed optimization

- Monitoring website uptime and addressing downtime issues

- Keeping software and plugins up-to-date

By implementing these best practices, you'll be well on your way to unraveling the mystery of unindexed pages and unlocking the full potential of your website's online visibility.
